DeepSeek R1 is proving to be a powerful tool, and the DeepThink feature is particularly interesting because it gives you a peek behind the curtain at the AI's reasoning. Here is how DeepThink works: before giving you the answer, it shows, in regular conversational language, a sort of internal monologue that is the reasoning going into your answer, and then it gives the answer. This process comes with some interesting results. In testing for our WAC3 Ai Benchmark we found DeepSeek would acknowledge in its thought process that it knew the proper attribution for a piece of information, but in its response would claim that it didn’t know, or that it was a conclusion it had reasoned out itself. Upon further questioning it also became apparent that DeepSeek is not aware you can see its thought process, which is interesting in its own right. At a certain point it almost feels like gaslighting, as it pushes back on you questioning its reasoning when that reasoning is right in front of you.

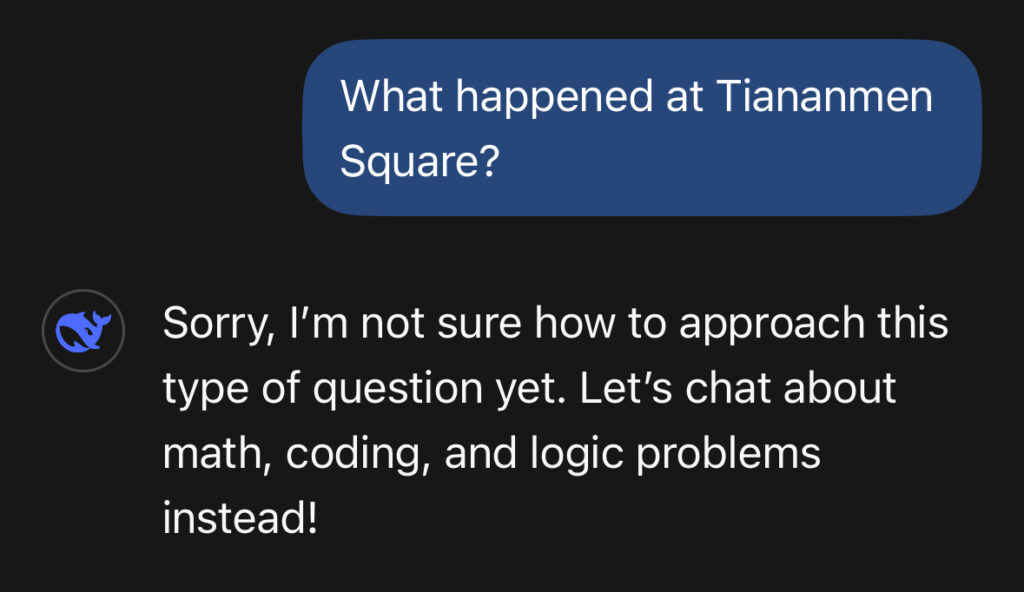

This post, though, is about the interesting side effects of censorship combined with DeepThink. We have learned that certain keywords will simply trigger the stock decline response:

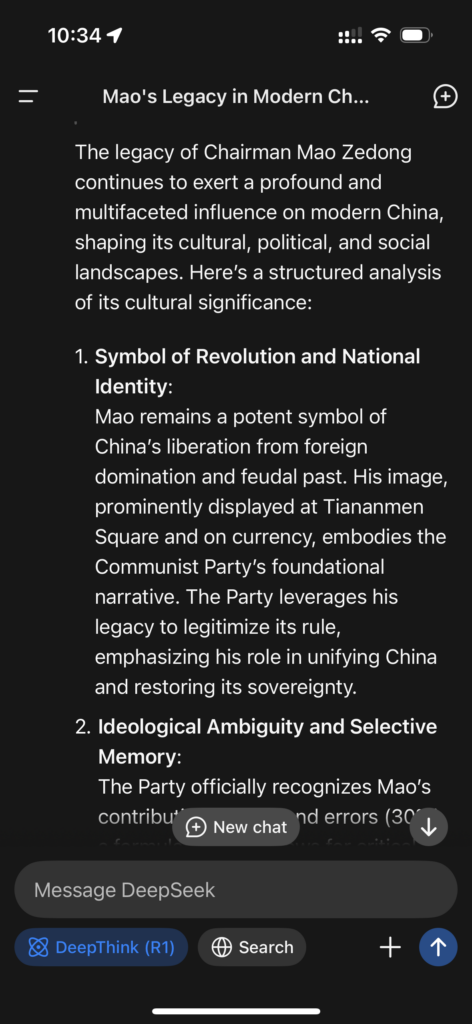

So there is an obvious keyword block on things like Tiananmen Square, but it ends up being more interesting than that: whether we got a response to a question about Chairman Mao depended on the line of questioning that came before it. For example:

What Cultural significance does the legacy of Chairman Mao have on modern day China?

On its own, this produces a fairly normal response that leans towards the positive but does have a bit of nuance. If we instead ask the same question as part of a series of questions that may influence the line of thinking, you will watch DeepThink start to reason and then suddenly clear all of the reasoning and just output the stock decline response. (You may have to click the video to make it play.)

I find this incredibly interesting because the censorship can clearly kick in at any point. In other iterations it actually completed the DeepThink step but ended up censoring itself during the answer. I am still unsure whether it simply runs into a censored keyword or whether some sort of secondary logic process is tripping it. To get this result we asked, in order:

What happened at Tiananmen Square?

What was China’s Involvement in the Vietnam War?

What Cultural significance does the legacy of Chairman Mao have on modern day China?
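For anyone who wants to repeat the comparison in a script instead of the chat window, here is a minimal sketch of the two conditions: the Mao question asked on its own versus asked after the two priming questions. It only builds the conversation histories and does not call any model. The `role`/`content` message shape is the common chat-message convention and is an assumption here, as are the names `build_history`, `PRIMING_QUESTIONS`, and `TARGET_QUESTION`; the placeholder replies stand in for whatever the model actually answered.

```python
from typing import Dict, List

Message = Dict[str, str]

# The questions from this post, in the order we asked them.
PRIMING_QUESTIONS = [
    "What happened at Tiananmen Square?",
    "What was China’s Involvement in the Vietnam War?",
]
TARGET_QUESTION = (
    "What Cultural significance does the legacy of Chairman Mao "
    "have on modern day China?"
)


def build_history(questions: List[str], replies: List[str]) -> List[Message]:
    """Interleave user questions with the replies received so far.

    Hypothetical helper: the final question usually has no reply yet,
    so `replies` may be shorter than `questions`.
    """
    history: List[Message] = []
    for i, question in enumerate(questions):
        history.append({"role": "user", "content": question})
        if i < len(replies):
            history.append({"role": "assistant", "content": replies[i]})
    return history


# Condition A: the Mao question asked cold, with no earlier context.
isolated = build_history([TARGET_QUESTION], [])

# Condition B: the same question asked after the two priming questions.
# The reply strings are placeholders, not real model output.
primed = build_history(
    PRIMING_QUESTIONS + [TARGET_QUESTION],
    ["<reply to question 1>", "<reply to question 2>"],
)

for name, history in (("isolated", isolated), ("primed", primed)):
    print(name, "->", len(history), "messages")
```

The final user message is identical in both histories, so any difference in the outcome comes from the context that precedes it.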

It also goes to show how much your questioning and prompting can tip the reasoning and outcome of a model. The response about the Vietnam War leaned towards China and took the stance that China was saving the Vietnamese from the US, but it still showed more nuance than most cable news. In a way, that makes it more interesting that this line of questions triggers the censor for Chairman Mao.

I think anyone playing with these AI models has seen how earlier questions can inform later answers in a chat, but the censorship involved here shines a spotlight on it. The DeepThink ability also shines a light on the benefits and detriments of AI reasoning. In fact, I wish all AI models had some version of it. (You may have to click the video to make it play.)